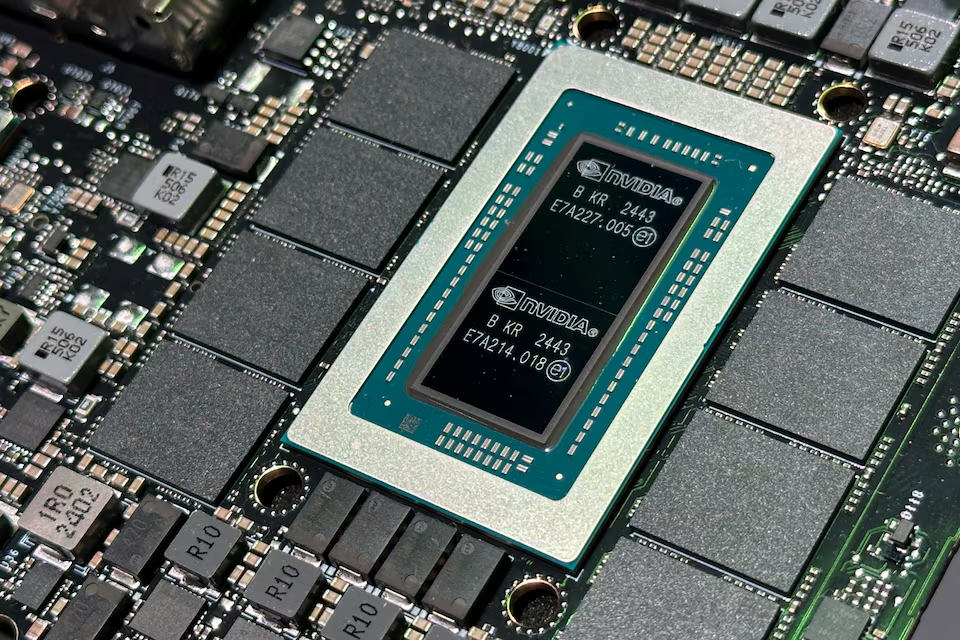

New data released on Wednesday, June 4, 2025, shows that Nvidia’s (NVDA.O) newest chips have made gains in training large artificial intelligence (AI) systems.

The fresh benchmarks indicate a dramatic reduction in the number of chips required to train complex large language models (LLMs). This efficiency gain is vital for the AI industry, which constantly seeks to optimize the intensive computational processes behind cutting-edge AI.

MLCommons Benchmarks Highlight Performance Gains

MLCommons, a non-profit organization dedicated to publishing standardized benchmark performance results for AI systems, provided the new data.

Comprehensive AI Training Data

The results detail how chips from various manufacturers, including Nvidia and Advanced Micro Devices (AMD.O), perform during the AI training phase. During training, AI systems are fed vast quantities of data to learn patterns and make predictions. While much of the stock market’s recent attention has shifted towards the larger market for AI inference (where AI systems handle user queries), the number of chips needed for training remains a critical competitive factor.

This is particularly true as companies like China’s DeepSeek claim to develop competitive chatbots using significantly fewer chips than their U.S. counterparts.

First Benchmarks for Large Models

These results are the first MLCommons has released on how chips perform when training massive AI systems. The example used is Llama 3.1 405B, Meta Platforms’ (META.O) open-source AI model. The model has enough “parameters” to indicate how chips would perform on some of the most complex training tasks in the world, which can involve trillions of parameters. The benchmark therefore serves as a proxy for how these chips handle the largest training workloads.

Nvidia Blackwell’s Dominance in Training Speed

Nvidia’s latest generation of chips, Blackwell, demonstrated remarkable performance in these benchmarks.

Double the Speed of Previous Generation

Nvidia and its partners were the only entrants to submit data on training a model of that size. The results showed that Nvidia’s new Blackwell chips are more than twice as fast as its previous-generation Hopper chips on a per-chip basis. A gain of that size can translate into faster AI development and lower computing costs.

Dramatic Reduction in Training Time

In the fastest results for Nvidia’s new chips, a cluster of 2,496 Blackwell chips completed the training test in 27 minutes. Beating that time took more than three times as many of Nvidia’s previous-generation chips. The comparison illustrates the efficiency gain of the Blackwell architecture.
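The figures above can be put into rough numbers. The short Python sketch below is only a back-of-the-envelope illustration: "chip-minutes" is a convenience measure assumed here, not a metric reported by MLCommons, and the Hopper figure is a lower bound derived from the phrase "more than three times," not a reported cluster size.

```python
# Figures taken from the benchmark results described in the article.
BLACKWELL_CHIPS = 2_496      # chips in the fastest Blackwell cluster
TRAINING_MINUTES = 27        # time that cluster needed for the training test

# Assumption for illustration: "more than three times" is treated as a
# lower bound of exactly 3x; the actual Hopper cluster size is not given.
HOPPER_MULTIPLIER_FLOOR = 3


def chip_minutes(chips: int, minutes: int) -> int:
    """Total chip-minutes consumed: number of chips times wall-clock minutes."""
    return chips * minutes


blackwell_chip_minutes = chip_minutes(BLACKWELL_CHIPS, TRAINING_MINUTES)
hopper_chip_floor = BLACKWELL_CHIPS * HOPPER_MULTIPLIER_FLOOR

print(f"Blackwell run: {blackwell_chip_minutes:,} chip-minutes")
print(f"Hopper chips needed to beat it: more than {hopper_chip_floor:,}")
```

On these assumptions, the Blackwell run used 67,392 chip-minutes, and a faster Hopper run would have needed more than 7,488 chips.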

Industry Trends: Smaller Clusters for Greater Efficiency

The AI industry is also seeing an evolution in how large-scale training tasks are managed.

Subsystems Over Homogeneous Clusters

During a press conference, Chetan Kapoor, Chief Product Officer for CoreWeave, a company that collaborated with Nvidia on some of the benchmark results, discussed an emerging industry trend. He noted a shift towards “stringing together smaller groups of chips into subsystems for separate AI training tasks.”

This approach contrasts with the traditional method of creating massive, homogeneous groups of 100,000 chips or more. Kapoor said the methodology lets developers keep reducing the time required to train “crazy, multi-trillion parameter model sizes.” Modular clusters of this kind are one way to meet the growing computational demands of cutting-edge AI models.